Fitness/Health

(51) EXTENSIVE FAMILIARIZATION IS REQUIRED BEFORE ASSESSING ACUTE CHANGES IN MULTIPLE OBJECT TRACKING PERFORMANCE

Blake W. Johnson, MS

PhD Student

University of Central Florida

Winter Park, Florida, United States

Jessica M. Moon, MS, CISSN

PhD(c)

University of Central Florida

Maitland, Florida, United States

John Pinette

Research Assistant

University of Central Florida

Orlando, Florida, United States

Aneesa Khwaja

Research Assistant

University of Central Florida

Orlando, Florida, United States

Aubrey Fontenot

Research Assistant

University of Central Florida

Orlando, Florida, United States

Violette Gibbs

Research Assistant

University of Central Florida

Orlando, Florida, United States

Trevor J. Dufner

PhD(c)

University of Central Florida

Orlando, Florida, United States

Adam J. Wells, PhD

PhD

University of Central Florida

Orlando, Florida, United States

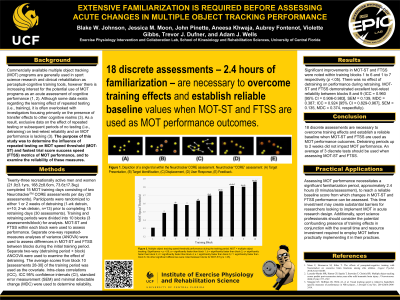

Background: Commercially available multiple object tracking (MOT) programs are generally used in sport science research and clinical rehabilitation as perceptual-cognitive training tools; however, there is increasing interest in using MOT programs as an acute assessment of cognitive performance. Although some data exist regarding the learning effect of repeated testing (i.e., training), this effect is often overlooked, with investigators focusing primarily on the presence of transfer effects to other cognitive domains. As a result, data specifically addressing the effect of repeated testing, or of subsequent periods of no testing (i.e., detraining), on test-retest reliability and MOT performance are lacking.

Purpose: To determine the influence of repeated testing on MOT speed threshold (MOT-ST) and fastest trial score success speed (FTSS) metrics of MOT performance, and to examine the reliability of these measures.

Methods: Twenty-three recreationally active men and women (21.9 ± 3.1 yrs, 168.2 ± 8.6 cm, 73.6 ± 17.3 kg) completed 15 MOT training days consisting of two Neurotracker™ CORE assessments per day (30 assessments). Participants were randomized to either 1 or 2 weeks of detraining (1-wk detrain, n = 10; 2-wk detrain, n = 13) prior to completing 15 retraining days (30 assessments). Training and retraining periods were each divided into 10 blocks (3 assessments/block) for analysis. MOT-ST and FTSS within each block were used to assess performance. Separate one-way repeated-measures analyses of variance (ANOVA) were used to assess differences in MOT-ST and FTSS between blocks during the initial training period. Separate two-way (detraining period × block) analyses of covariance (ANCOVA) were used to examine the effect of detraining, with the average score from block 10 (assessments 28-30) of the training period as the covariate. Intraclass correlation coefficients (ICC), standard error of measurement (SEM), and minimal detectable change (MDC) were used to determine reliability.
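The block structure described in the Methods can be illustrated with a minimal sketch. It assumes that a block score is the arithmetic mean of its three assessments, which the abstract implies but does not state outright. The function name `block_means` and the example scores are hypothetical and are not study data.

```python
from statistics import mean

def block_means(scores, block_size=3):
    """Collapse a sequence of assessment scores into consecutive block means.

    Assumption: each block score is the mean of `block_size` assessments.
    """
    if len(scores) % block_size != 0:
        raise ValueError("number of scores must be a multiple of block_size")
    return [
        mean(scores[i:i + block_size])
        for i in range(0, len(scores), block_size)
    ]

# Hypothetical placeholder scores for 30 assessments (not study data).
example_scores = list(range(1, 31))
blocks = block_means(example_scores)

# Block 10 covers assessments 28-30, the covariate window named in the Methods.
covariate = blocks[9]
```

With 30 assessments and 3 assessments per block, this yields the 10 blocks used for analysis, and the last element corresponds to the block 10 covariate.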

Results: Significant improvements in MOT-ST and FTSS were noted within training blocks 1 to 6 and 1 to 7, respectively (p < .05). There was no effect of detraining on performance during retraining. MOT-ST and FTSS demonstrated excellent test-retest reliability between blocks 8 and 9 (MOT-ST: ICC = .960, SEM = .139, MDC = .387; FTSS: ICC = .964, SEM = .135, MDC = .374).
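The abstract does not state how SEM and MDC were computed. A commonly used convention is SEM = SD × √(1 − ICC) and MDC at the 95% confidence level = SEM × 1.96 × √2. The sketch below assumes that convention; the function names are hypothetical. Under this assumption, the reported SEM values give MDC figures that agree with the reported ones to within rounding.

```python
import math

def sem_from_icc(sd, icc):
    """Standard error of measurement from a between-subject SD and an ICC.

    Assumption: SEM = SD * sqrt(1 - ICC), a common convention that the
    abstract does not confirm.
    """
    return sd * math.sqrt(1.0 - icc)

def mdc95(sem):
    """Minimal detectable change at 95% confidence: SEM * 1.96 * sqrt(2)."""
    return sem * 1.96 * math.sqrt(2.0)

# Reported SEM values from the Results, in the order they are listed.
mdc_first = mdc95(0.139)   # reported MDC = .387
mdc_second = mdc95(0.135)  # reported MDC = .374
```

The small gap between the computed and reported first value is consistent with the SEM having been rounded to three decimals before reporting.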

Conclusions: Eighteen discrete assessments are necessary to overcome training effects and establish a reliable baseline when MOT-ST and FTSS are used as MOT performance outcomes. Detraining periods of up to 2 weeks did not impact MOT performance. An average of 3 discrete tests should be used when assessing MOT-ST and FTSS.

Practical Applications: Assessing MOT performance necessitates a significant familiarization period, approximately 2.4 hours (8 minutes/assessment), to reach a reliable baseline score from which changes in MOT-ST and FTSS performance can be assessed. This time investment may create substantial barriers for researchers looking to implement MOT in acute research designs. Additionally, sport science professionals should weigh the potential confounding presence of training effects, together with the overall time and resource investment required to employ MOT, before implementing it in practice.
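The familiarization time quoted above follows from simple arithmetic: 6 blocks × 3 assessments/block = 18 assessments, and 18 × 8 minutes = 144 minutes, or 2.4 hours. A minimal sketch of that calculation, with a hypothetical function name, is:

```python
def familiarization_hours(n_blocks, assessments_per_block, minutes_per_assessment):
    """Total familiarization time in hours for a given number of blocks."""
    total_assessments = n_blocks * assessments_per_block
    total_minutes = total_assessments * minutes_per_assessment
    return total_minutes / 60

# 6 blocks of 3 assessments at 8 minutes each, as reported in the abstract.
hours = familiarization_hours(6, 3, 8)
```

The same function can be used to estimate the time cost of other familiarization schedules, for example a different number of blocks.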

Acknowledgements: None